Running Local LLMs Using Ollama

If you want to run a local LLM, Ollama is one of the easiest tools available. It lets you run a range of open models directly on your own hardware, provided your machine has enough memory (RAM or VRAM) for the model you choose.

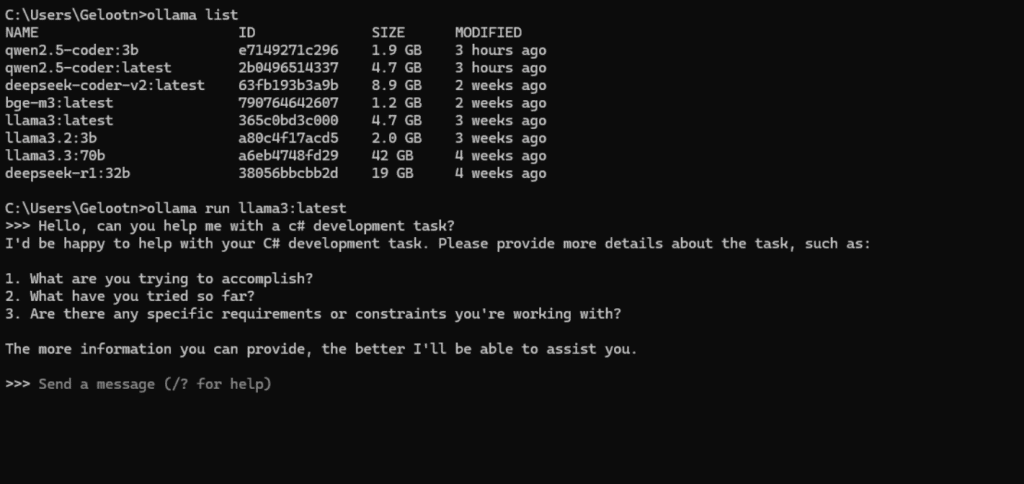

To get started, simply visit the Ollama website and download the application for your platform. Once installed, you can start a model using the command line:

    ollama run llama3:latest

This command will download and start the llama3 model (or any other model you specify). It’s a quick way to get a local LLM up and running.
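Once a model is running, Ollama also serves an HTTP API on the local machine, so the same model can be called from a script. The following is a minimal sketch, assuming the server listens on its default address (http://localhost:11434) and that llama3:latest has already been pulled. The helper names build_generate_request and generate are illustrative, not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def build_generate_request(model: str, prompt: str) -> dict:
    """Build the JSON body for Ollama's /api/generate endpoint."""
    # stream=False asks for one complete JSON reply instead of a token stream.
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one prompt to a running Ollama server and return the reply text."""
    body = json.dumps(build_generate_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


# Example (requires a running Ollama server with the model available):
# print(generate("llama3:latest", "Explain what a local LLM is in one sentence."))
```

Only the standard library is used here, so nothing needs to be installed beyond Python itself.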

Interacting with the Model

Command Line Interface

Ollama provides a simple command line interface (CLI) that allows you to interact directly with your locally running LLMs. This interface is especially useful for quick testing, scripting, or minimal setups where you don’t need a graphical user interface.

After starting a model with the ollama run command, you’ll enter an interactive prompt where you can type questions or prompts, and the model responds in real time. It works much like a chat session, but purely in your terminal.
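The chat-session behavior can be reproduced in a script by sending the full message history to Ollama's /api/chat endpoint on every turn. This is a rough sketch rather than a description of how the CLI itself is implemented. It assumes the default local address and the llama3:latest model, and the function names are made up for illustration. Typing /bye ends the loop, mirroring the exit command in the Ollama prompt.

```python
import json
import urllib.request

CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default Ollama address


def add_message(history: list, role: str, content: str) -> list:
    """Return a new history list with one more chat message appended."""
    return history + [{"role": role, "content": content}]


def send_chat(model: str, history: list, url: str = CHAT_URL) -> str:
    """Send the whole conversation so far and return the assistant's reply."""
    payload = {"model": model, "messages": history, "stream": False}
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]


def chat_loop(model: str = "llama3:latest") -> None:
    """Read prompts from the terminal until the user types /bye."""
    history = []
    while True:
        user_input = input(">>> ").strip()
        if user_input == "/bye":
            break
        history = add_message(history, "user", user_input)
        reply = send_chat(model, history)
        print(reply)
        # Keep the reply in the history so the next turn has context.
        history = add_message(history, "assistant", reply)


# To start a session (requires a running Ollama server): chat_loop()
```

Resending the history each turn is what gives the model its conversational memory; the server does not keep state between requests in this sketch.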

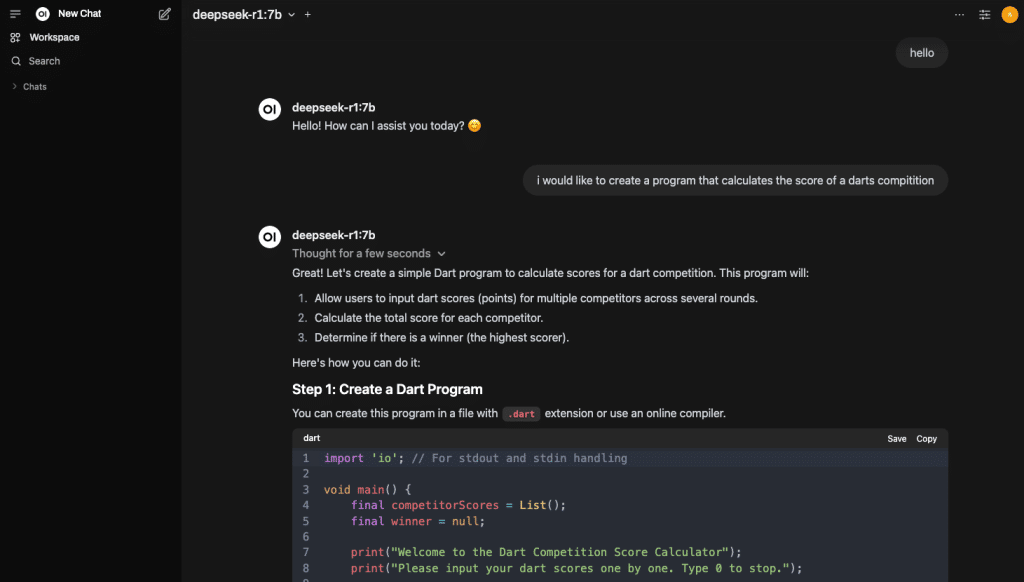

Open WebUI

For a more user-friendly experience, you can use Open WebUI. This open-source interface provides a ChatGPT-like experience in your browser, complete with conversation management and chat history.

You can run Open WebUI in a Docker container and configure it to connect to your locally running Ollama instance.
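Before pointing a containerized UI at Ollama, it can help to confirm that the Ollama API is reachable and to see which models it reports. The sketch below queries the /api/tags endpoint, which lists locally available models. The base URL is an assumption: from the host it is typically http://localhost:11434, while from inside a Docker container the host is often reached as http://host.docker.internal:11434, depending on your Docker setup. The helper names are illustrative.

```python
import json
import urllib.request


def tags_url(base_url: str) -> str:
    """Build the URL of Ollama's model-listing endpoint."""
    return base_url.rstrip("/") + "/api/tags"


def model_names(tags_response: dict) -> list:
    """Extract model names from a parsed /api/tags response."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_models(base_url: str = "http://localhost:11434") -> list:
    """Ask a running Ollama instance which models it has available."""
    with urllib.request.urlopen(tags_url(base_url)) as response:
        return model_names(json.loads(response.read()))


# Example (requires a running Ollama server):
# print(list_models())
# print(list_models("http://host.docker.internal:11434"))  # from inside a container
```

If the call fails with a connection error, the UI will most likely be unable to reach Ollama at that address either.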

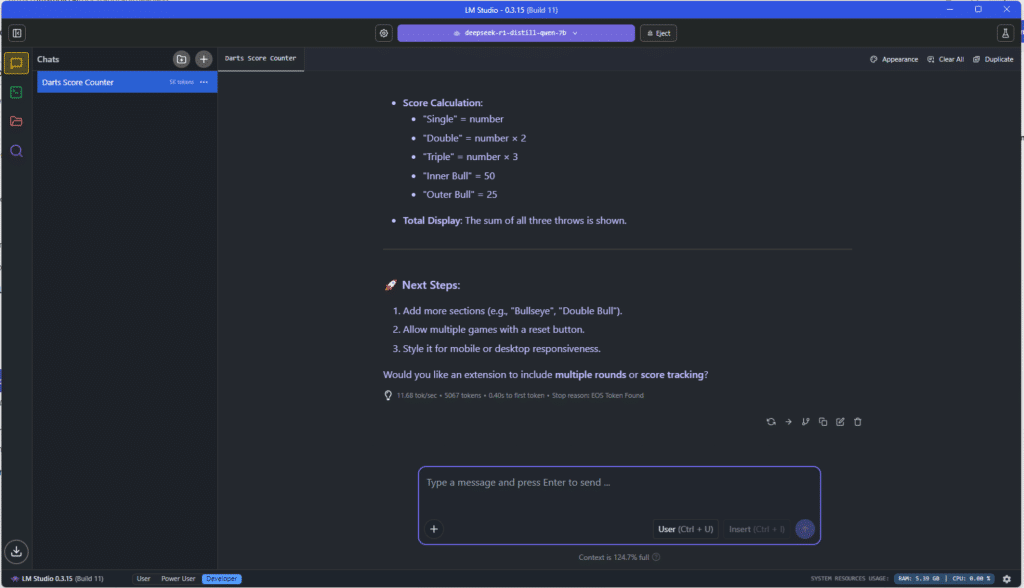

LM Studio

LM Studio is a desktop application that combines model download, model management, and chat in a single graphical user interface. It needs no separate web interface or complex configuration, and it lets you interact with many of the same open models that are available through Ollama.

This streamlined setup is ideal for those who want a quick, hassle-free way to run and manage models. LM Studio simplifies the process, offering a complete environment for LLM experimentation, making it accessible to developers and casual users alike. For more details, visit the LM Studio website.

Using Local LLMs as a Copilot in Your IDE

Depending on your IDE, there are multiple ways to integrate local LLMs and turn them into a coding assistant or “copilot”.

JetBrains AI Assistant

JetBrains’ AI plugin supports local models and allows you to configure the assistant to run purely offline. If you’re a JetBrains Ultimate subscriber, the AI Assistant comes included. It brings full AI functionality to supported IDEs, now with local model support.

Proxy AI Plugin (JetBrains Ecosystem)

Proxy AI is a third-party JetBrains plugin that connects seamlessly with your local Ollama setup. Just load your desired model and start using the assistant. It offers:

- Full chat interface

- Code completion

- Name suggestions

- Context-aware suggestions using files, folders, and Git commits

The local LLM features are free to use. A subscription is only required for access to advanced online models.

Visual Studio Code

VS Code also has several community plugins for integrating local LLMs. One promising option is VSCode Ollama, although the current UI is in Chinese, which may be a limitation for some users.

Conclusion

As AI tools become more integrated into the software development workflow, the ability to run large language models (LLMs) locally is a game-changer. Tools like Ollama, Open WebUI, LM Studio, and IDE plugins now make it not only possible but practical to run these models entirely on your own machine.

Why Go Local?

1. Zero Cost at Runtime

Running models locally means no subscription fees, no pay-per-token usage, and no API rate limits. Once the model is downloaded, it’s yours to use as much as you want, with your own hardware and electricity as the only ongoing costs. For hobbyists, open-source contributors, and cost-conscious teams, this eliminates the barrier of ongoing cloud service expenses.

2. Total Control Over Your Data

Privacy is a growing concern—especially in regulated industries or projects involving proprietary code. With local models, your code never leaves your machine. There’s no risk of sensitive data being logged, stored, or analyzed by a third-party provider. This is crucial for working on internal tools, client codebases, or anything under NDA.

3. Offline-Ready

Local LLMs work even when you’re disconnected. Whether you’re on a plane, in a secure facility, or simply experiencing flaky Wi-Fi, your AI copilot remains available. That kind of reliability can be a huge productivity booster.

4. Performance & Customization

With local access, you can optimize your environment for speed, experiment with fine-tuning, or swap between models depending on your task. You’re no longer locked into the limitations of a proprietary platform.
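Swapping models per task can be as simple as a lookup table in front of the request. The sketch below is one possible arrangement, not a recommendation of specific models: the task names and the mapping are hypothetical, and codellama:latest is assumed to have been pulled alongside llama3:latest.

```python
DEFAULT_MODEL = "llama3:latest"

# Hypothetical mapping from task type to a locally available model tag.
MODEL_FOR_TASK = {
    "chat": "llama3:latest",
    "code": "codellama:latest",  # assumption: this model has been pulled
}


def pick_model(task: str) -> str:
    """Choose a model for the task, falling back to the default."""
    return MODEL_FOR_TASK.get(task, DEFAULT_MODEL)


def build_chat_request(task: str, prompt: str) -> dict:
    """Build an Ollama /api/chat request body using the model chosen for the task."""
    return {
        "model": pick_model(task),
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
```

Because the model is just a field in the request body, changing it requires no restart; Ollama loads the requested model when the request arrives.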